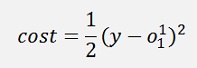

Backpropagation is most often explained using the mean square deviation as the cost function. A more elegant approach, which usually works better, is to use the maximum log likelihood function for the cost calculation (see Logistic regression using max log likelihood for a detailed description).

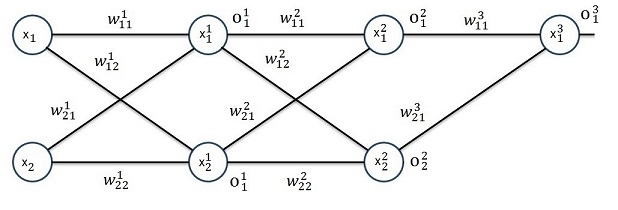
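To make the two cost functions concrete, here is a minimal C# sketch that evaluates both for one sample with two outputs. It assumes sigmoid outputs o in (0, 1) and targets y in {0, 1}. Maximizing the log likelihood is the same as minimizing its negative, so the negative log likelihood is what gets computed as a cost here. The factor 1/2 on the squared error is an assumption (it makes the derivative come out as -(y - o) times the activation derivative), and the class and method names are hypothetical, not part of the demo project.

```csharp
using System;

// Hypothetical helper class, only for illustrating the two cost functions.
public static class CostFunctions
{
    // Mean square deviation for one sample: 1/2 * sum (y - o)^2
    // (the factor 1/2 is an assumption; it cancels when differentiating)
    public static double MeanSquare(double[] y, double[] o)
    {
        double sum = 0.0;
        for (int j = 0; j < y.Length; j++)
            sum += 0.5 * (y[j] - o[j]) * (y[j] - o[j]);
        return sum;
    }

    // Negative log likelihood for one sample:
    // -sum [ y ln(o) + (1 - y) ln(1 - o) ], requires 0 < o < 1
    public static double NegLogLikelihood(double[] y, double[] o)
    {
        double sum = 0.0;
        for (int j = 0; j < y.Length; j++)
            sum -= y[j] * Math.Log(o[j]) + (1.0 - y[j]) * Math.Log(1.0 - o[j]);
        return sum;
    }

    public static void Main()
    {
        double[] y = { 1.0, 0.0 };   // target values
        double[] o = { 0.9, 0.2 };   // net outputs (example values)
        Console.WriteLine($"mean square deviation:    {MeanSquare(y, o):F4}");
        Console.WriteLine($"negative log likelihood:  {NegLogLikelihood(y, o):F4}");
    }
}
```

The log likelihood cost punishes a confident wrong output much harder than the squared error does, because ln(o) goes to minus infinity as o approaches 0.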

In my article Backpropagation I used a neural net with 3 layers and 2 input features, like this:

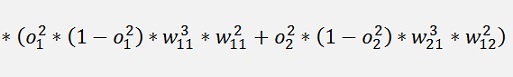

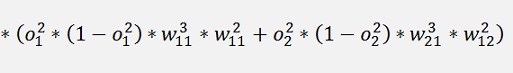

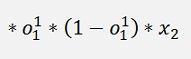

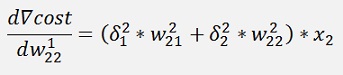

With the mean square deviation, the gradients for training this net were:

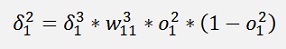

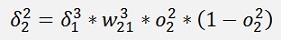

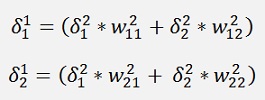

and with the local gradients

and

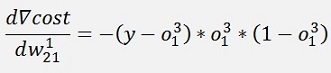

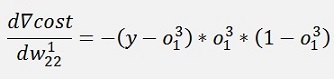

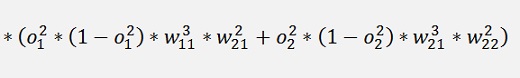

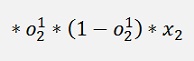

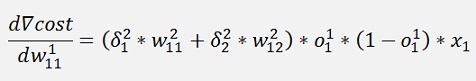

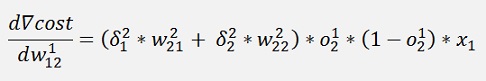

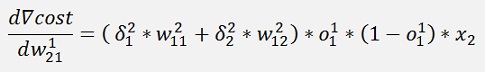

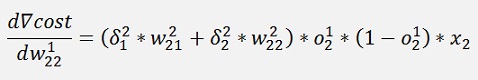

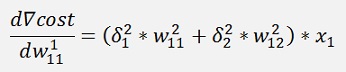

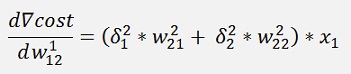

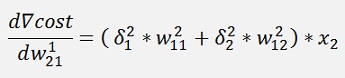

The gradients became:

and

were the local gradients for the first layer.

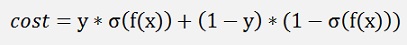
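For reference, the mean square deviation gradients can be summarized in the variable names of the BackwardProp() code further below. This is a sketch under stated assumptions: a sigmoid activation σ in every layer, a squared error cost with the factor 1/2, inputs x_j of a layer, and weights w_{jk} from input j to output k, as in layerRight.w[j, k]. The symbols are chosen to mirror the code and may differ from those in the figures above.

```latex
\begin{aligned}
C &= \tfrac{1}{2}\sum_k (y_k - o_k)^2, \qquad o_k = \sigma(z_k), \qquad z_k = \sum_j w_{jk}\,x_j + b_k \\
\delta_k^{\text{last}} &= \frac{\partial C}{\partial z_k} = -(y_k - o_k)\,\sigma'(z_k) = -(y_k - o_k)\,o_k(1 - o_k) \\
\delta_j^{\text{hidden}} &= o_j(1 - o_j)\sum_k \delta_k^{\text{right}}\,w^{\text{right}}_{jk} \\
\frac{\partial C}{\partial w_{jk}} &= \delta_k\,x_j, \qquad \frac{\partial C}{\partial b_k} = \delta_k
\end{aligned}
```

In the code, δ is stored in gradient[], the sums of δ·x and δ over the training samples are accumulated in deltaW[j, k] and deltaOffs[k], and o(1 - o) is presumably what dAct(o) returns for the sigmoid.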

If the maximum log likelihood is used as the cost function, that means

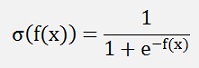

With the sigmoid function

this is similar to Logistic regression using max log likelihood. Only the f(x) inside the sigmoid function is more complex here, so the chain rule has to be applied more often to differentiate it. But that is no problem, as we already have all of these derivatives from further above.

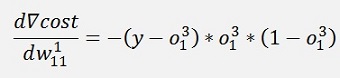

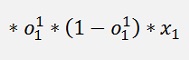

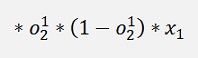

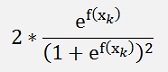

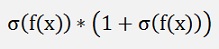

In Logistic regression using max log likelihood we saw that the only difference between the log likelihood approach and the mean square deviation approach was the part that vanished:

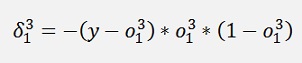

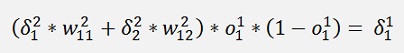

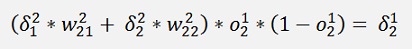

In backpropagation with the max log likelihood the same happens. Only

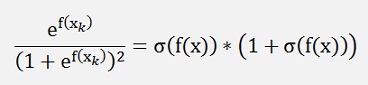

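and the part that drops out is the derivative of the sigmoid function, σ'(z) = o(1 - o), which the code computes as actLayer.dAct(actLayer.o[j]). It is basically the outermost part of the differentiation for the local gradient of the last layer (of the mean square deviation approach).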

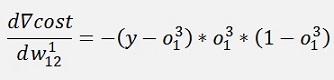
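The cancellation can be checked in a few lines. Assuming the negative log likelihood is minimized (equivalent to maximizing the log likelihood) and the last layer uses the sigmoid, so that σ'(z_k) = o_k(1 - o_k), the derivative of the cost with respect to o_k has exactly that factor in its denominator:

```latex
\begin{aligned}
C &= -\sum_k \bigl[\, y_k \ln o_k + (1 - y_k)\ln(1 - o_k) \,\bigr] \\
\frac{\partial C}{\partial o_k} &= -\frac{y_k}{o_k} + \frac{1 - y_k}{1 - o_k} = \frac{o_k - y_k}{o_k(1 - o_k)} \\
\delta_k^{\text{last}} &= \frac{\partial C}{\partial o_k}\,\sigma'(z_k) = \frac{o_k - y_k}{o_k(1 - o_k)}\; o_k(1 - o_k) = -(y_k - o_k)
\end{aligned}
```

The last line is exactly the new gradient line in BackwardProp(): -(y[j] - actLayer.o[j]).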

With this the gradient for max log likelihood becomes:

with

For the local gradients of the last layer.

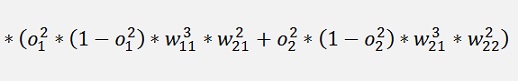
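A quick way to convince oneself of the two local gradients of the last layer is a finite-difference check on a single sigmoid output neuron. The sketch below uses hypothetical names and arbitrary example values (target y = 1, net input z = 0.3); it differentiates both costs numerically with respect to z and compares the results with the analytic expressions, written in terms of the output o as in the code.

```csharp
using System;

// Hypothetical gradient check for one sigmoid output neuron.
public static class GradientCheck
{
    public static double Sigmoid(double z) => 1.0 / (1.0 + Math.Exp(-z));

    // costs of a single sigmoid output neuron as functions of its net input z
    public static double MeanSquare(double y, double z)
    {
        double o = Sigmoid(z);
        return 0.5 * (y - o) * (y - o);
    }

    public static double NegLogLikelihood(double y, double z)
    {
        double o = Sigmoid(z);
        return -(y * Math.Log(o) + (1.0 - y) * Math.Log(1.0 - o));
    }

    // central finite difference of a cost with respect to z
    public static double Numeric(Func<double, double, double> cost, double y, double z, double h = 1e-6)
        => (cost(y, z + h) - cost(y, z - h)) / (2.0 * h);

    // analytic local gradients of the last layer, expressed through the output o
    public static double LocalGradientMeanSquare(double y, double o) => -(y - o) * o * (1.0 - o);
    public static double LocalGradientLogLikelihood(double y, double o) => -(y - o);

    public static void Main()
    {
        double y = 1.0, z = 0.3; // arbitrary example values
        double o = Sigmoid(z);
        Console.WriteLine($"mean square:    numeric {Numeric(MeanSquare, y, z):F6}, analytic {LocalGradientMeanSquare(y, o):F6}");
        Console.WriteLine($"log likelihood: numeric {Numeric(NegLogLikelihood, y, z):F6}, analytic {LocalGradientLogLikelihood(y, o):F6}");
    }
}
```

Each numeric value should agree with its analytic counterpart to about six decimals; only the mean square version carries the extra factor o(1 - o).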

That affects only the last layer. All the layers further left are untouched and remain the same as in the mean square deviation approach.

So only one small change in the BackwardProp() function is needed to switch the Backpropagation algorithm to the maximum log likelihood approach:

Replace

```csharp
actLayer.gradient[j] = -(y[j] - actLayer.o[j]) * actLayer.dAct(actLayer.o[j]);
```

in the first loop with

```csharp
actLayer.gradient[j] = -(y[j] - actLayer.o[j]);
```

The complete function then looks like this:

```csharp
// ElementAt() requires "using System.Linq;"
private void BackwardProp(double[] x, double[] y)
{
    int i, j, k;
    if (layers > 1)
    {
        TLayer actLayer = layer.ElementAt(layers - 1); // current layer: start with the last one
        // the inputs of the last layer are the outputs of the layer to its left
        double[] actX = layer.ElementAt(layers - 2).o;

        // last layer
        for (j = 0; j < actLayer.featuresOut; j++)
        {
            // the cost sum still accumulates the squared error
            costSum = costSum + ((y[j] - actLayer.o[j]) * (y[j] - actLayer.o[j]));
            // mean square deviation version:
            // actLayer.gradient[j] = -(y[j] - actLayer.o[j]) * actLayer.dAct(actLayer.o[j]);
            // maximum log likelihood version: the dAct() factor drops out
            actLayer.gradient[j] = -(y[j] - actLayer.o[j]);
        }
        for (j = 0; j < actLayer.featuresIn; j++)
        {
            for (k = 0; k < actLayer.featuresOut; k++)
                actLayer.deltaW[j, k] = actLayer.deltaW[j, k] + actLayer.gradient[k] * actX[j];
        }
        for (j = 0; j < actLayer.featuresOut; j++)
        {
            actLayer.deltaOffs[j] = actLayer.deltaOffs[j] + actLayer.gradient[j];
        }

        // all layers except the last one
        for (i = layers - 2; i >= 0; i--)
        {
            actLayer = layer.ElementAt(i);
            TLayer layerRight = layer.ElementAt(i + 1); // next layer to the right of the current one
            // inputs of layer i: outputs of layer i - 1, or the net input x for the first layer
            actX = (i > 0) ? layer.ElementAt(i - 1).o : x;
            for (j = 0; j < actLayer.featuresOut; j++)
            {
                // backpropagate the local gradients of the layer to the right
                actLayer.gradient[j] = 0;
                for (k = 0; k < layerRight.featuresOut; k++)
                    actLayer.gradient[j] = actLayer.gradient[j] + (layerRight.gradient[k] * actLayer.dAct(actLayer.o[j]) * layerRight.w[j, k]);
                actLayer.deltaOffs[j] = actLayer.deltaOffs[j] + actLayer.gradient[j];
                for (k = 0; k < actLayer.featuresIn; k++)
                    actLayer.deltaW[k, j] = actLayer.deltaW[k, j] + actLayer.gradient[j] * actX[k];
            }
        }
    }
}
```

All the rest can remain the same.
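To see the modified last-layer gradient working end to end, the following self-contained C# sketch trains a tiny 2-3-1 sigmoid net on a toy data set (logical OR). It is not the demo project: the class TinyNet, its methods, the data, the weight initialization and the averaging of the accumulated deltas over the batch are all assumptions for illustration. Only the structure of the backward pass mirrors BackwardProp(), including separate learning rates for the first and the second layer.

```csharp
using System;

// Hypothetical 2-3-1 sigmoid net, trained with the max log likelihood gradient.
public static class TinyNet
{
    const int nIn = 2, nHid = 3, nOut = 1;
    // w1[j, k]: input j -> hidden k, w2[j, k]: hidden j -> output k (same index order as w[j, k] above)
    static readonly double[,] w1 = new double[nIn, nHid], w2 = new double[nHid, nOut];
    static readonly double[] b1 = new double[nHid], b2 = new double[nOut];

    static double Sigmoid(double z) => 1.0 / (1.0 + Math.Exp(-z));

    public static void Init(int seed)
    {
        var rnd = new Random(seed); // small random start weights in [-0.5, 0.5)
        for (int j = 0; j < nIn; j++) for (int k = 0; k < nHid; k++) w1[j, k] = rnd.NextDouble() - 0.5;
        for (int j = 0; j < nHid; j++) for (int k = 0; k < nOut; k++) w2[j, k] = rnd.NextDouble() - 0.5;
        Array.Clear(b1, 0, nHid);
        Array.Clear(b2, 0, nOut);
    }

    static void Forward(double[] x, double[] h, double[] o)
    {
        for (int k = 0; k < nHid; k++)
        {
            double z = b1[k];
            for (int j = 0; j < nIn; j++) z += w1[j, k] * x[j];
            h[k] = Sigmoid(z);
        }
        for (int k = 0; k < nOut; k++)
        {
            double z = b2[k];
            for (int j = 0; j < nHid; j++) z += w2[j, k] * h[j];
            o[k] = Sigmoid(z);
        }
    }

    // mean negative log likelihood over all samples
    public static double Cost(double[][] xs, double[][] ys)
    {
        double sum = 0.0;
        var h = new double[nHid];
        var o = new double[nOut];
        for (int s = 0; s < xs.Length; s++)
        {
            Forward(xs[s], h, o);
            for (int k = 0; k < nOut; k++)
                sum -= ys[s][k] * Math.Log(o[k]) + (1.0 - ys[s][k]) * Math.Log(1.0 - o[k]);
        }
        return sum / xs.Length;
    }

    // one batch gradient descent step; rate1 for the first layer, rate2 for the second
    public static void TrainEpoch(double[][] xs, double[][] ys, double rate1, double rate2)
    {
        var dW1 = new double[nIn, nHid]; var dB1 = new double[nHid];
        var dW2 = new double[nHid, nOut]; var dB2 = new double[nOut];
        var h = new double[nHid]; var o = new double[nOut];
        var g1 = new double[nHid]; var g2 = new double[nOut];
        for (int s = 0; s < xs.Length; s++)
        {
            Forward(xs[s], h, o);
            // last layer: max log likelihood local gradient, without the activation derivative
            for (int k = 0; k < nOut; k++) g2[k] = -(ys[s][k] - o[k]);
            // hidden layer: unchanged, h(1 - h) is the sigmoid derivative
            for (int j = 0; j < nHid; j++)
            {
                g1[j] = 0.0;
                for (int k = 0; k < nOut; k++) g1[j] += g2[k] * h[j] * (1.0 - h[j]) * w2[j, k];
            }
            for (int j = 0; j < nHid; j++) for (int k = 0; k < nOut; k++) dW2[j, k] += g2[k] * h[j];
            for (int k = 0; k < nOut; k++) dB2[k] += g2[k];
            for (int j = 0; j < nIn; j++) for (int k = 0; k < nHid; k++) dW1[j, k] += g1[k] * xs[s][j];
            for (int k = 0; k < nHid; k++) dB1[k] += g1[k];
        }
        int n = xs.Length; // the accumulated deltas are averaged over the batch
        for (int j = 0; j < nHid; j++) for (int k = 0; k < nOut; k++) w2[j, k] -= rate2 * dW2[j, k] / n;
        for (int k = 0; k < nOut; k++) b2[k] -= rate2 * dB2[k] / n;
        for (int j = 0; j < nIn; j++) for (int k = 0; k < nHid; k++) w1[j, k] -= rate1 * dW1[j, k] / n;
        for (int k = 0; k < nHid; k++) b1[k] -= rate1 * dB1[k] / n;
    }

    public static void Main()
    {
        // logical OR as toy data (not the flower data of the article)
        double[][] xs = { new[] { 0.0, 0.0 }, new[] { 0.0, 1.0 }, new[] { 1.0, 0.0 }, new[] { 1.0, 1.0 } };
        double[][] ys = { new[] { 0.0 }, new[] { 1.0 }, new[] { 1.0 }, new[] { 1.0 } };
        Init(1);
        Console.WriteLine($"cost before training: {Cost(xs, ys):F4}");
        for (int epoch = 0; epoch < 5000; epoch++) TrainEpoch(xs, ys, 0.1, 0.4);
        Console.WriteLine($"cost after training:  {Cost(xs, ys):F4}");
    }
}
```

The sketch uses the learning rates 0.1 and 0.4 mentioned below. The cost should drop clearly over the 5000 batch steps, but the exact values depend on the random start weights.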

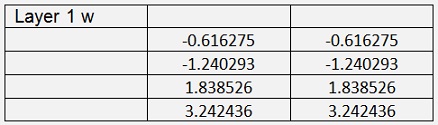

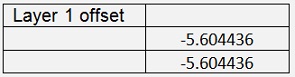

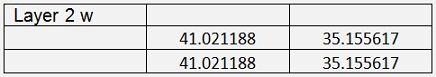

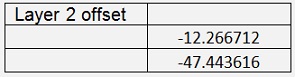

With this change, 100000 iterations, and a learning rate of 0.1 for the first layer and 0.4 for the second, the backpropagation algorithm computes the values:

With Cost = 0.0142

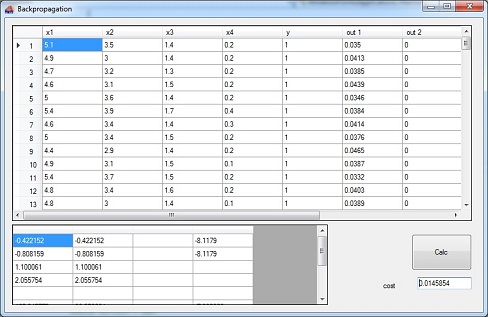

That does not look much better than the 0.015 obtained with the mean square deviation approach in Backpropagation. But if we compare the test application running with these parameters against the test of the mean square deviation approach, there is a clear improvement:

With the parameters trained by the maximum log likelihood approach, the 15 test flowers are recognized with a mean probability of 99.3 %. The mean square deviation approach reaches 98.25 % for the same flowers. If we convert these probabilities into uncertainties (100 % minus the probability), the mean square deviation approach gets 1.75 % whereas the maximum log likelihood approach gets 0.7 %. That is quite a difference.
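The ratio of the two uncertainties, computed from the numbers above, puts the difference into a single figure:

```latex
\frac{u_{\text{log likelihood}}}{u_{\text{mean square}}}
  = \frac{100\,\% - 99.3\,\%}{100\,\% - 98.25\,\%}
  = \frac{0.7\,\%}{1.75\,\%}
  = 0.4
```

So on these 15 test flowers the maximum log likelihood parameters leave only 40 % of the uncertainty of the mean square deviation parameters.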

The demo project consists of one main window.

The upper string grid shows the input data and the computed outputs, and the lower string grid shows the trained parameters: w on the left and the offsets on the right side. Pressing